We spend considerable time studying and talking with leaders, teams, and investors about AI. What strikes us is not a lack of intelligence or determination to find ways to use it, but rather the difficulty of imagining (and therefore discussing) the longer-term consequences it might have for the business and the people working in it.

Most organisations are focused on addressing short-term problems: productivity gaps, operational inefficiencies, competitive pressures, cost control, and meeting quarterly financial targets. AI is primarily viewed through the same lens, with the promise of doing more with less.

That is understandable. When unpredictable change accelerates and pressure and fear rise, attention narrows to immediate action and control (an action bias), and we forget what compounds over time: judgment, focus, learning, and human connection.

This article aims to broaden that frame again.

What the research is now confirming

Recent research from UC Berkeley tracked 200 employees over eight months to understand how generative AI changed their work habits. The findings, published this month in Harvard Business Review, confirm a pattern we’ve been observing:

AI doesn’t reduce work. It intensifies it.

Workers expand their scope of work, absorb responsibilities that previously belonged to others, and blur the boundaries between work and non-work. They enthusiastically multitask, feeling productive while the “brain cost” accumulates, often not realizing it until it is too late.

The result? As one engineer summarized, “You had thought that maybe you could work less. But then, really, you don’t work less. You just work the same amount or even more.” We’ve seen this before: new (tech) tools don’t give time back; they raise the ceiling on what gets expected.

The researchers found this led to workload creep, cognitive fatigue, burnout, and weakened decision-making. The initial (and voluntary!) productivity surge gave way to lower-quality work and turnover.

This pattern took eight months of research to detect, which tells you something. Organizations won't notice the damage until much later, when it has already accumulated.

You can't infuse intelligence without building capacity

What makes this research revealing is what it exposes about partial solutions.

The researchers recommend an “AI practice”—intentional pauses, sequencing work, and adding human grounding. These are helpful interventions. But they address symptoms, not causes.

What’s actually happening?

- When we expand task scope without the domain expertise to verify quality, we create speed without substance.

- When we blur work boundaries without sufficient sustained attention, multitasking replaces focus, and cognitive load accumulates invisibly.

- When we lose the human judgment about when to use AI and when a direct human connection is essential, we become isolated from colleagues and disconnected from our own work.

Each form of intensification reveals a missing human capability.

Pure technical training creates speed without the verification ability to ensure quality. Pure workflow redesign produces an architecture that operators can’t meaningfully execute. Pure mindset work builds openness without the technical or domain capability to act on it.

Each intervention makes local sense. Each creates new vulnerabilities and problems when applied in isolation.

This is the long-term risk: organisations and their workforces succeed with AI in the short term while slowly becoming less capable, more dependent, and more isolated.

The interconnected nature of readiness

Human readiness for AI isn’t a checklist of separate skills. It’s a living system where everything depends on everything else.

- You can’t use AI tools effectively without sustained attention for meaningful collaboration.

- You can’t collaborate meaningfully without domain expertise to guide and validate what AI produces.

- You can’t guide effectively without the ability to verify outputs and spot when things go wrong.

- You can’t do any of this without understanding when AI should be used—and when human judgment must take the lead.

And none of it develops without genuine curiosity about what’s possible.

When these dimensions are strengthened together, they reinforce one another. Technical skill enables deeper domain work. Deeper attention reveals more nuanced insights. Better verification builds confidence for exploration. Stronger judgment creates space for effective partnership.

When you develop them separately, they create new vulnerabilities. Speed without verification. Attention without expertise. Tools without judgment. Curiosity without capability.

This is why the “AI practice” recommendations—while helpful—don’t address the root cause. The interventions assume capabilities that the intensification itself erodes.

For organizations, this means developing capabilities systematically across teams. For individuals, this means taking responsibility for building your own resilient practice—because waiting for others to provide it won’t work.

This is why we started futurebraining

This systems view shapes everything we do. We don’t predict which agentic workflows will prevail, or when (no one knows). We don’t design automation strategies. We don’t teach people how to prompt faster.

Our work is preparing people and leadership teams to operate responsibly within whatever systems emerge—without becoming dumber, disconnected, or dependent in the process.

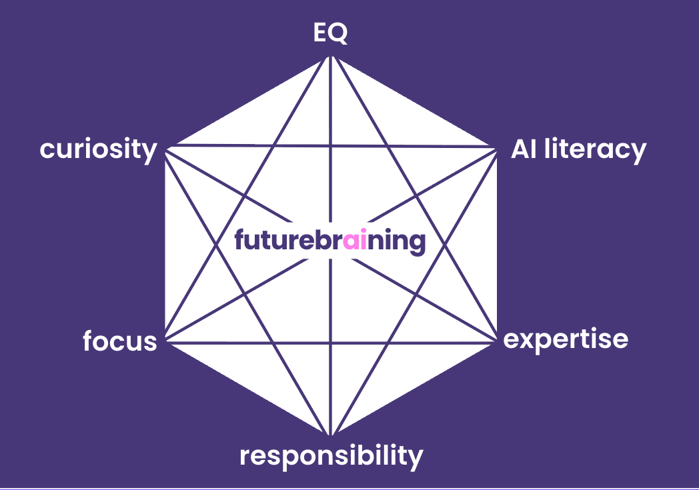

We focus on five interconnected human capabilities: expertise, focus, responsibility, emotional intelligence, and AI-literacy, all powered by curiosity.

Not as separate initiatives. As one integrated system. We work on the full picture—because partial solutions, no matter how well-intentioned, create the very problems they’re meant to solve.

Is our system perfect? No. It's a work in progress in a world that's just beginning to understand what it really means to infuse people with artificial intelligence. But we're building it with intention, adjusting as we learn, and grounding it in what we observe happening to real people and real organizations.

The long game

In this incredibly complex landscape, every vendor will try to convince you that their area is the central problem—the one thing that needs solving, after which everything else will fall into place.

- The AI platform vendors will tell you it’s about the right tools and infrastructure.

- The workflow consultants will tell you it’s about process redesign and agentic architecture.

- The training providers will tell you it’s about upskilling and prompt engineering.

- The change management firms will tell you it’s about mindset and culture.

Each is partly right. Each is selling a fragment.

The organisations and individuals that will succeed in the long term are those that take a systems view.

Not because systems thinking sounds sophisticated, but because human readiness actually operates as a system. When you develop all five capabilities together, you build a workforce that becomes smarter, more independent, and more connected across the organisation. That is the opposite of what intensification creates: dumber, dependent, and isolated.

AI systems will keep improving. They will become more autonomous, more persuasive, more embedded in everyday work. Human systems do not automatically improve alongside them.

The UC Berkeley research shows what happens when organisations allow AI to reshape work without intention: voluntary intensification that appears productive until it becomes unsustainable.

Organisations that treat AI as a purely technical rollout accumulate hidden people debt that they will eventually have to repay, with interest. The future will not be won by those who automate the fastest. It will be shaped by those who can use AI while staying smarter, more independent, and more connected.

That’s the long game.

And that’s what gets us up in the morning!

Over the coming weeks, we'll break down each capability, share practical tools, and show how individuals and organizations can build this resilient system for themselves.
